Create a retrieval augmented generation (RAG) application by using LlamaIndex and large language models (LLMs) to enhance information retrieval and generation. By integrating data retrieval with Granite LLM-powered content generation, you’ll be able to query and retrieve information from diverse document sources such as PDF, HTML, and TXT files. This approach simplifies complex document interactions, making it easier to build context-aware applications that deliver accurate and relevant information.

In today’s fast-paced research environment, the sheer volume of scientific papers can be overwhelming, making it nearly impossible to stay up to date with the latest developments. Researchers and professionals often struggle to find the time to sift through countless documents, let alone extract the most relevant and insightful information. But what if there were an AI assistant that could do this for you, helping you read, understand, and summarize vast amounts of data, all in real time?

Enter retrieval augmented generation (RAG), a cutting-edge approach that combines the power of large language models (LLMs) with advanced retrieval techniques. RAG enhances the capabilities of traditional LLMs by allowing them to incorporate the most relevant and specific information from diverse data sources. Whether you need the latest research, detailed reports, or up-to-date documentation, a RAG-powered application can ensure that every response is precise, informed, and contextually relevant.

In this guided project, you’ll develop an AI assistant that can provide expert-level, real-time answers to complex user queries, drawing on your own documents. Using LlamaIndex as the backbone, you’ll build a RAG application that retrieves the most relevant information so that your assistant’s answers are grounded in your sources. By the end of this project, you’ll be equipped to create systems that combine retrieval techniques with LLMs, producing responses that are contextually aware, accurate, and up to date, which can make a significant difference in how you process and engage with scientific literature.

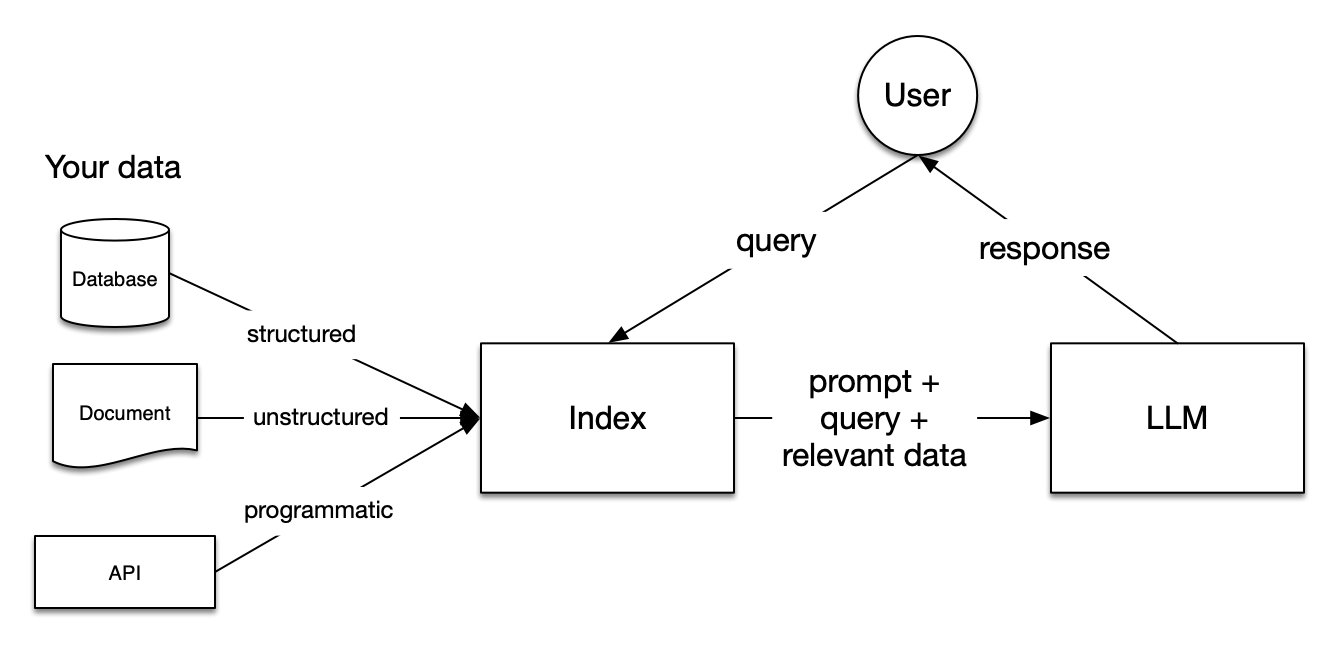

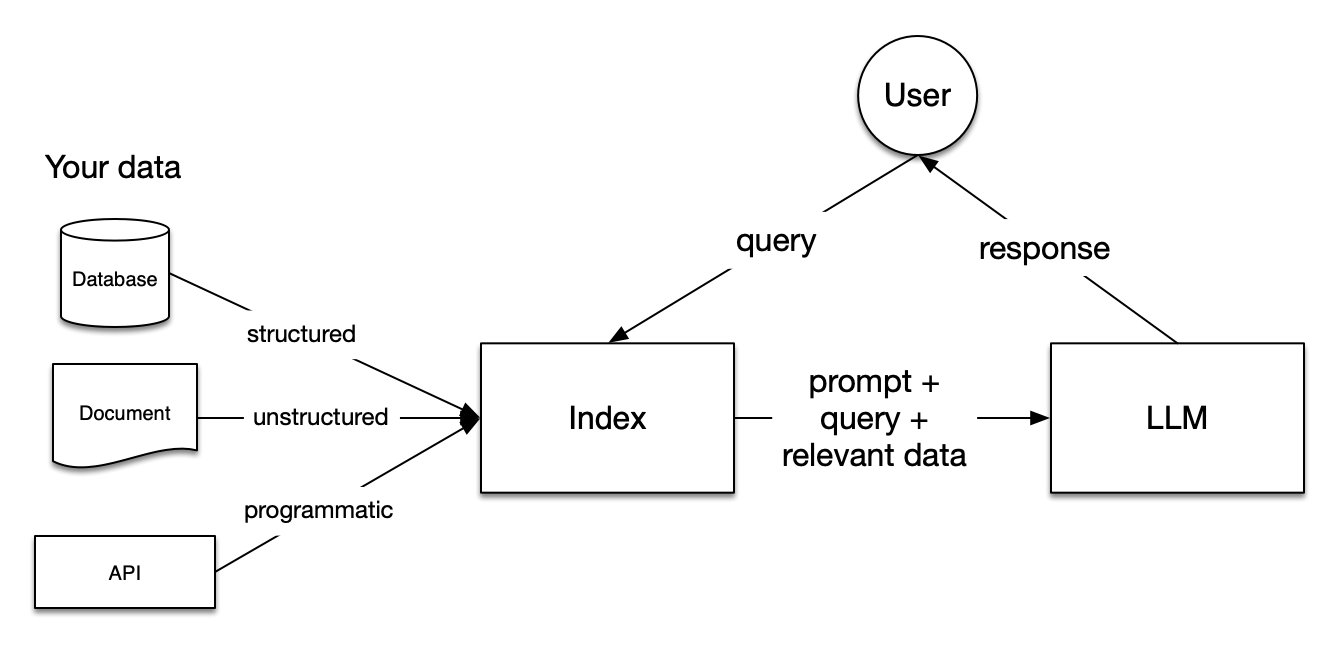

RAG framework (source: LlamaIndex)

A look at the project ahead

By the end of the project, you will have the knowledge and skills to:

- Construct a RAG application: Use LlamaIndex to build a RAG application that efficiently retrieves information from various document sources.

- Load, index, and retrieve data: Master the techniques of loading, indexing, and retrieving data to ensure that your application accesses the most relevant information.

- Enhance querying techniques: Integrate LlamaIndex into your applications so that responses are precise, contextually aware, and aligned with the most current data available.
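
The load, index, and retrieve steps listed above can be sketched in plain Python. This is a toy illustration only and does not use LlamaIndex’s API: the in-memory `DOCUMENTS`, the bag-of-words `embed` function, and the helper names `build_index`, `retrieve`, and `build_prompt` are all assumptions made for this example. A real application would load files from disk, use a learned embedding model, and send the final prompt to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory documents; a real application would load
# PDF, HTML, or TXT files instead.
DOCUMENTS = {
    "rag.txt": "Retrieval augmented generation combines document retrieval with a language model.",
    "index.txt": "An index stores a vector for each document chunk so similar text can be found quickly.",
    "python.txt": "Python is a programming language often used in Jupyter notebooks.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text):
    """Toy embedding (an assumption): a bag-of-words term-count vector."""
    return Counter(tokenize(text))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents):
    """Index step: store one vector per document."""
    return {name: embed(text) for name, text in documents.items()}


def retrieve(index, query, top_k=1):
    """Retrieve step: rank documents by similarity to the query."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda name: cosine(index[name], query_vec), reverse=True)
    return ranked[:top_k]


def build_prompt(documents, index, query, top_k=1):
    """Augment step: place the retrieved text in front of the question."""
    context = "\n".join(documents[name] for name in retrieve(index, query, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


index = build_index(DOCUMENTS)
prompt = build_prompt(DOCUMENTS, index, "How does an index find similar text?")
print(prompt)
```

The resulting prompt is what would be passed to an LLM for the generation step; that call is left out here because it depends on the model and service you use.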

What you’ll need

Before starting this guided project, ensure that you have a basic understanding of Python programming and familiarity with working in a Jupyter Notebook environment. You’ll also benefit from some prior exposure to concepts related to machine learning, natural language processing (NLP), and vector embeddings.

The IBM Skills Network Labs environment provides a ready-to-use setup with essential tools, so you won’t need to worry about complex installations. For the best experience, please use a current version of Chrome, Edge, Firefox, Internet Explorer, or Safari.
